Previously we looked at scraping data from the web by example, where the website paginated the data. That is, it spread the results over multiple pages. This week I’m going to show you how you can automatically scrape data from multiple web pages with Power Query using a custom function.

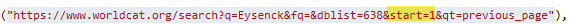

This approach works with URLs that have a predictable structure. Looking at the URL from the WorldCat website used in the previous post we can see in the image below there is a reference for the ‘start’ number highlighted in yellow:

This start number is referring to the first record of 10 returned on the page. As we move through the pages of results the start number in the URL increments by 10.

Note: Not all URLs will follow this pattern. Some might use a sequential numbering system that refers to the page number, others might use text strings that relate to different regions etc. Either way, the process is virtually the same.

Download Power BI Desktop File

Enter your email address below to download the sample workbook.

Watch the Video

Steps for Automating Scraping of Data from Multiple Web Pages

There are 4 steps required to scrape data from multiple web pages with Power Query. We did the first step in the previous post where we created a query that returns the first page of results.

In this post we’re going to look at the next 3 steps:

- Convert the query to a function that can be executed for each page of results

- Generate a list of page start numbers

- Execute the function for each page start number

Step 1: Convert Query to a Function

Starting off where we left the last example, we need to go to the Query Editor Home tab and open the Advanced Editor. Here we see the M code Power Query wrote for us.

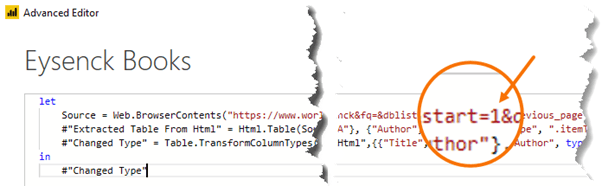

We’re only interested in the first row that contains the URL for the web page. In this URL is the start number of the first record being returned on the page. You can see it magnified in the image below:

Each page returns 10 results, therefore the URL for the second page will have a start number of 11, and page 3 will have a start number of 21 and so on. We need to replace this hard-keyed value with a variable and convert the query to a function.

To convert the query to a function we add a line of code at the top for the variable name:

(PageStart as text) =>

I’ve called the variable ‘PageStart’. Then we replace the 1 in the URL with the variable name. Notice you need to append it with a double quote and ampersand on either side:

Important: Power Query is case sensitive therefore you must enter your variable name with the case that matches the variable name. e.g. pagestart would return an error.

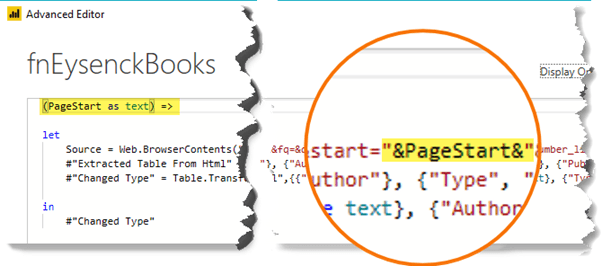

When you click ‘Close’ on the Advanced Editor you’ll see that the query is now converted to a function. I like to give my functions a name prefixed with ‘fn’ to differentiate them from other queries, as you can see in the Properties below:

Step 2: Generate Page Start Numbers

For this website we need a series of numbers for the variable which we’ll generate using Power Query, but other websites might use text strings. If so, you can import a table that contains your list of text string variables.

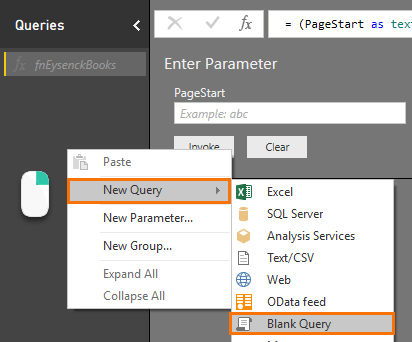

Ok, we’ll create a new query to automatically generate the list of page start numbers. Right-click in the queries pane > New Query > Blank Query:

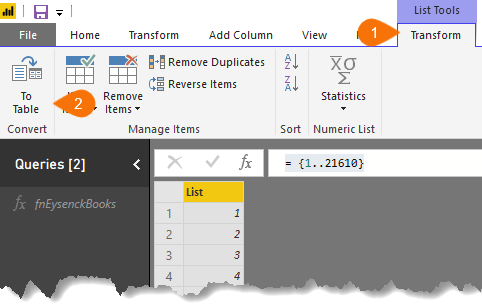

In the formula bar we’ll generate a list of numbers 1 through to 21610 with the following formula:

= {1..21610}

This will return a list that we can convert to a table: List Tools Transform tab > To Table:

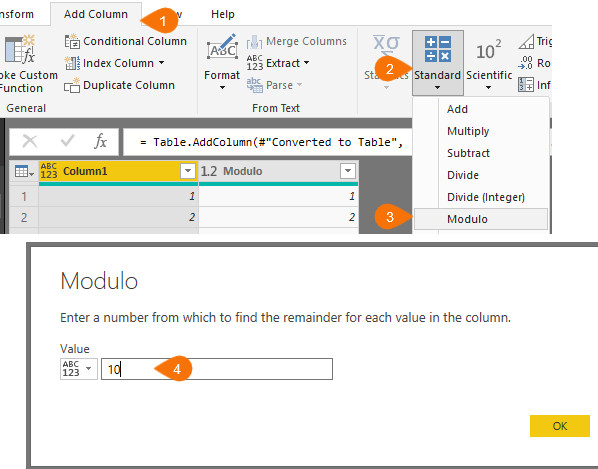

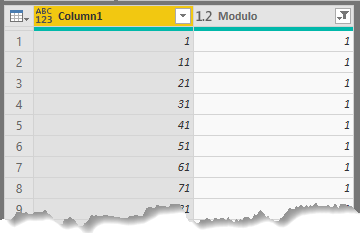

Add a Modulo column to detect every 10th number in the list (remember we only need the page start number and there are 10 records per page):

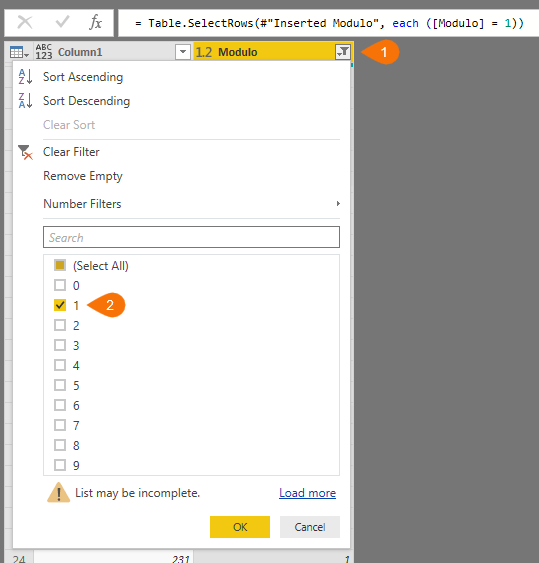

Filter the Modulo column for rows containing 1:

This will leave us with a list of PageStart numbers in Column1 that we need for our variable:

The Modulo column has done its job, so you can click on the column header and press the Delete key to get rid of it.

Now, double click the header for ‘Column1’ and give it a proper name. I called mine ‘PageStartNumbers’.

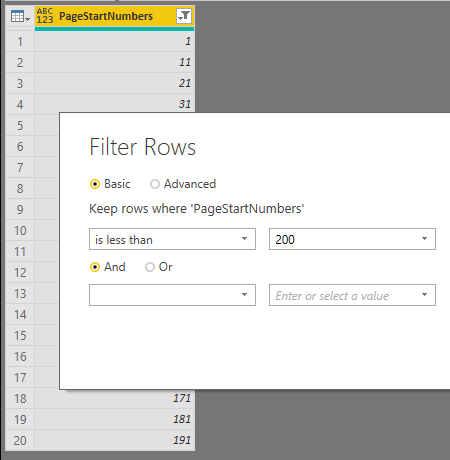

We now have 2,161 page start numbers! Loading 2,161 web pages to scrape their data will take a very long time, so for the purpose of this example I’m going to filter them to the first 20 with a Filter for numbers less than 200:

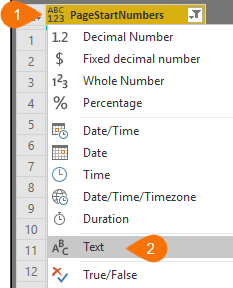

Next, I need to change the data type for the PageStartNumbers column to text, as it’ll be inserted into the URL, which is a text string. Click on the ABC123 icon in the left of the column header > Text:

Now we’re ready to invoke the custom function we created in step 1.

Step 3: Invoke Custom Function

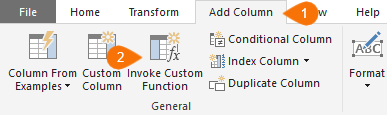

Add Column tab > Invoke Custom Function:

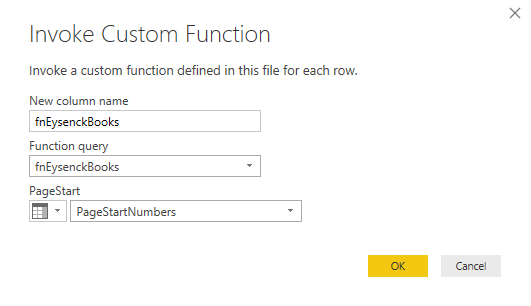

At the Invoke Custom Function dialog box select the function from the Function query list and the PageStartNumbers column for the PageStart variable:

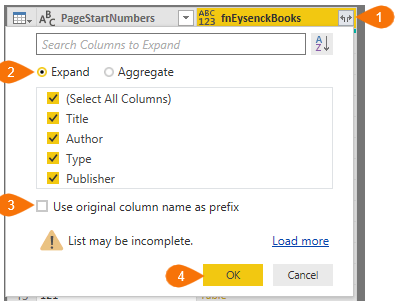

Now we can expand the columns: click on the double headed arrow in the fnEysenckBooks column > Expand. Be sure to deselect ‘Use original column name as prefix’:

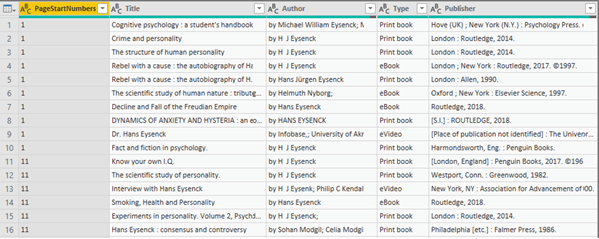

You should see a list of books with title, author, type and publisher information.

You can delete the ‘PageStartNumbers’ column as this is no longer needed.

One of the great things about Power Query is that it maintains a connection to the web page which enables you to refresh the connection and get updates as needed.

Learn Power Query

If you’d like to learn more of Power Query’s wonders, please consider my Power Query Course. Or check out what Power BI has to offer, in my Power BI Course.

Hi Mynda,

Could you please advise how to get data from multiple pages on a website – no page # in URL

Hi Soumya,

It depends on how the pages are loaded. But if no page numbers are provided in the URL, the site may be using JavaScript to load the pages, and that can’t be scraped with Power Query.

If you can provide the site URL I can check.

Phil

How can you modify the provided Power Query function to accommodate websites that use a sequential numbering system to represent page numbers, rather than a consistent increment by 10, and adjust the list of page start numbers accordingly for such websites?

It’s even easier if the page numbers are sequential. Simply stop at the point where you create the list of numbers. i.e. don’t do the Add a Modulo step onwards. If you get stuck, please post your question on our Excel forum where you can also upload a sample file and someone can help you further.

Hi,

thank you for this post, very useful and the only one I could follow to get my API data from all pages.

I have a question, as I expanded my columns after invoking custom function below row 208 I get an error “We cannot convert the value “” to type Table.” I checked and it seems there is an empty cell in one of the columns in the table… How can I fix this? None of the rows that should come after row 208 won’t load.

Thank you in advance for your response!

You can add a try otherwise clause to the custom function that handles the error. Bit hard to explain here.

Hi agan and thank you for your response. I tried doing as suggested but can’t get it to work. I understand with my function the problem appears when on expand steps, as there are some null values. Here are few lines of this part of first query that I turned into function – could you please give me suggestion of how to apply try..otherwise for one of these steps?

#”Expanded projectComposition” = Table.ExpandTableColumn(Table0, “projectComposition”, {“leadResearchOrganisation”, “personRoles”, “project”}, {“projectComposition.leadResearchOrganisation”, “projectComposition.personRoles”, “projectComposition.project”}),

#”Expanded projectComposition.leadResearchOrganisation” = Table.ExpandTableColumn(#”Expanded projectComposition”, “projectComposition.leadResearchOrganisation”, {“name”}, {“leadResearchOrganisation.name”}),

#”Expanded projectComposition.personRoles” = Table.ExpandTableColumn(#”Expanded projectComposition.leadResearchOrganisation”, “projectComposition.personRoles”, {“personRole”}, {“projectComposition.personRoles.personRole”}),

Hi Edyta,

Please see this tutorial on Try Otherwise.

Mynda

Hi Mynda,

how can I get data from a website that needs to be logged in, in Excel by PowerQuery ?

Usually it’s very difficult, especially if the website requires a form to be filled in. I don’t have an easy answer.

Dear! Thank you so much for this video.

Could you please share with us how can we do the following:

I have a web based database that is constantly fed. Today it has 300 itens and 15 itens per page, so: 20 pages. But next week, this database may have 600 itens, and due that, 40 pages. How can I automate the function to identify the total number of the pages each time it acesses the web data source?

Thank you!

Hi Rodrigo,

So your system has no way of checking how any items/pages there will be? It really should have some way to do this, or at least provide you with information in each response (page) to indicate that there is more data yet to be retrieved.

You’ll need to examine the response from the database each time you send a request. I don’t know what it sends you but I would expect that it either has a field/record that tells you there is more data to get,or it indicates that there is no more data, either by explicitly indicating this, or by the system generating an error to indicate the end of data.

Without knowing how your system works I can’t be specific but these are the ways it should work.

If you can provide examples of your system’s responses then I can assist further. Please start a topic on our forum and attach these along with your query.

Regards

Phil

I would expect the system would tell you

I am trying to extract the directory from this website https://www.kornferry.com/about-us/consultants?initial=A. There is a page click option at the bottom of the website.

(PageStart as text)=>

let

Source = Web.BrowserContents(“https://www.kornferry.com/about-us/consultants?initial=”&PageStart&”),

#”Extracted Table From Html” = Html.Table(Source, {{“Column1”, “H3 *”}, {“Column2”, “.article-item-back H3”}, {“Column3”, “.btn-arrow”}}, [RowSelector=”.isotope-item”]),

#”Changed Type” = Table.TransformColumnTypes(#”Extracted Table From Html”,{{“Column1”, type text}, {“Column2”, type text}, {“Column3″, type text}})

in

#”Changed Type”

I am getting the token comma expected error and it shows right at “Extracted Table” area.

Q1: I could not find the error, could not find out where the error is

Q2: I am able to extract data one page at a time but for multiple pages, i am missing something.

Thanks in advance for any assistance

Hi Jay,

I think the URL is wrong:

Source = Web.BrowserContents(“https://www.kornferry.com/about-us/consultants?initial=”&PageStart&”),

Should be:

Source = Web.BrowserContents(“https://www.kornferry.com/about-us/consultants?initial=”&PageStart),

Hi Jay,

That directory is displayed using JavaScript so you won’t be able to get it using Power Query. PQ can’t interact with dynamic page elements like that.

You’d need to use something like Selenium/VBA that can actually send clicks to the page to update it

https://www.myonlinetraininghub.com/web-scraping-filling-forms

Regards

Phil

I’m curious about how you would set this up for Power BI refreshes online. When I applied these steps and then published I got an error message about how Power BI service cannot refresh dynamic datasets. This is due to the URL being dependent on the parameter I guess. I came across this blog post.

https://blog.crossjoin.co.uk/2016/08/16/using-the-relativepath-and-query-options-with-web-contents-in-power-query-and-power-bi-m-code/

However, I can’t use the [RelativePath] field within Web.BrowserContents() M function, which I believe is what is needed for “Web by Examples.” It has to be the Web.Contents() option. Unfortunately there are slight disparities when I switch the function.

Hi Ben,

Have you seen this post which talks about a workaround using Chris Webb’s technique. I hope that helps you overcome the problem.

Mynda

That’s basically what I did but it required me to change the source to Web.Contents as opposed to Web.BrowserContents(). It caused a very slight discrepancy in the results when I made the switch. 99% of it was the same based on what I could see. Can you do web by examples with Web.Contents()?

Hi Ben, Web.BrowserContents returns the HTML for the URL as viewed by a web browser, whereas Web.Contents returns the contents downloaded as binary. I guess this difference is enough to cause the discrepancy. You can try using Get Data > From Web and entering the URL to see if building it from scratch helps. Mynda

Hi Mynda,

Thank you for the content. Very useful! I will apply on some projects.

On the project I’m working right now, I have a situation that cannot apply this and I’m not finding a good solution, maybe you have one.

https://www.investing.com/economic-calendar/michigan-consumer-sentiment-320

I am trying to export data from this website. The problem is that when you open the page, you have 6 values appearing, and when you update next month the last value will disappear, so you lost the historical data. If you click show more you’ll have the historical ones saved, but I cannot access them using Power Query. I check and nothing related with the page number appears on advanced editor.

Do you have any suggestion?

Thank you a lot!

Hi Maria, It sounds like you’ll have to take a copy of the data before refreshing for the current month. You could try automating the copy and paste with VBA. Mynda

Works like a charm. Thanks a ton for this article. Really helpful. I’m a complete newbie and yet was able to understand this easily.

A couple of related questions: If I need two variables instead of one (say product ID and page numbers, instead of just the page numbers), will this work?

Secondly, if the above is possible I need to specify page numbers based on Product IDs (both of which can be derived from the query and response), will it be possible? For instance: Product 1234 should navigate through 12 pages and Product 4567 should navigate through 20 pages and so on.

TIA,

Sukesh

Hi Sukesh, I’m glad you found this tutorial helpful. I’m sure you can provide multiple variables. You will need to generate them as one text string to feed into the URL, so you may need to concatenate other characters as required.

Hi, thanks for a wonderful explanation.

It worked fine for some sites.

However, for a specific site I need to import data it won´t work,

Could you help me ?

https://digitarq.advis.arquivos.pt/details?id=1265252

https://digitarq.advis.arquivos.pt/details?id=1265253

…. and so on

Thank you so much

Hi Jose,

It only works on web pages that contain tables inside of HTML table tags. I can’t see any tables on these pages.

Mynda

Ah, Ok,

Thanks !

Hi Jose,

Perhaps scraping the pages using VBA would work for you:

https://www.myonlinetraininghub.com/web-scraping-multiple-pages

https://www.myonlinetraininghub.com/web-scraping-filling-forms

Regards

Phil

Hi, thanks for a great post! I’m not experienced with power query, but worked through your example and got it to work 🙂

Would it be possible to apply this to substitute several parts of an url? I.e to automate retrieving google search results with a list of search terms and i.e. five first pages of results? Any tips on how to approach this?

Hi Eric,

All you have to do is to add as many parameters you need in the function arguments:

(PageStart as text, Param1 as text, Param2 as text, Param3 as text)=>

Then use those parameters in URL construction:

URL=”x.com?p1=”&Param1&”&p2=”&Param2&”&p3=”&Param3

Thanks a lot! Works like a charm. I used it with Instagram API to iterate througn every media_id. I hope my comment would help others to find this article.

Great to know, Alex!

Hi There,

Thank you for the great post.

I have a similar situation but my API has a date field (yyyymmdd) and data changes with respect to the date.Is there any way that i can use custom date selection option and the data changes w.r.t the date. I tried the method you suggested above but this method brings all the data for the number of dates provided by me in the advance query (while creating a blank query).

Thanks in advance

Hi,

You can create a table with 1 column, 1 row, and type there the date you want.

In power query, you will use:

CustomDate = Excel.CurrentWorkbook(){[Name=”DateTableName”]}[Content]{0}[ColumnName]

You will be able to use this CustomDate parameter in the rest of the query.

Hi Catalin,

Thank you very much for your help. The above steps provided by you work very well for a single API. However, I have multiple API like below. When I try to use the 2nd API with the same CustomDate, its not working properly.

Ex: The below API has 1440 rows/day, when the 1st API is loaded its works fine but when the 2nd API is loaded, it is repeating the 1st row 1440 times and goes to the next 2nd row, repeats it 1440 times and so on. I’m not sure what I’m doing wrong here, can you please help me solve this issue.

API Format:

https://xyz.com/API/bike/dat/20191101/211_steer_1.json?

https://xyz.com/API/bike/dat/20191101/211_bell_3.json?

https://xyz.com/API/bike/dat/20191101/211_tire_1.json?

Code from Advanced editor is as follows:

let

Source = Excel.CurrentWorkbook(){[Name=”pick”]}[Content]{0},

#”Converted to Table” = Record.ToTable(Source),

#”Changed Type” = Table.TransformColumnTypes(#”Converted to Table”,{{“Value”, type text}}),

#”Invoked Custom Function” = Table.AddColumn(#”Changed Type”, “fn211_steer_1″, each fn211_steer_1([Value])),

#”Expanded fn211_steer_1″ = Table.ExpandTableColumn(#”Invoked Custom Function”, “211_steer_1”, {“Column1.m64”, “Column1.m6”, “Column1.ts”},

{“211_steer_1.Column1.m64”, “211_steer_1.Column1.m6”, “211_steer_1.Column1.ts”}),

#”Invoked Custom Function1″ = Table.AddColumn(#”Expanded 211_steer_1″, “211_bell_3″, each 211_bell_3([Value])),

#”Expanded 211_bell_3″ = Table.ExpandTableColumn(#”Invoked Custom Function1”, “211_bell_3”, {“Column1.m64”, “Column1.m6”, “Column1.ts”},

{“211_bell_3.Column1.m64”, “211_bell_3.Column1.m6”, “211_bell_3.Column1.ts”})

in

#”Expanded 211_bell_3″

Thank you for your help in advance.

You are using custom functions, I have no idea what those custom functions do.

Take a look into a cell in column “fn211_steer_1″ from #”Invoked Custom Function” step. In that cell there should be a table produced by your custom function.

If that table has more than 1 row, your next step to expand that column will also expand into rows, not just into columns.

You should use our forum to upload sample files if you need more help.

Thanks Mynda, great article!

This worked out well for me, except that it crashes every time when the page number does not exist.

I tried a little to find out how to use error handling in PQ, but so far I didn´t find anything useful.

If it doesn’t find the requested page, I want the function to move on to next in the list.

Hi Martin,

Error handling in Power Query is done with ‘try’ ‘otherwise’. This video by Miguel Escobar might give you some ideas.

Mynda

Thank you Mynda. Much appreciated.

Ow Man, this is the best example of web scraping with power bi I have found on internet ever. Thank you very much!!

Thanks for your kind words, Eduardo 🙂 Glad it was helpful.

Hi Mynda,

Seems there is a typo in the content:

(StartPage as text) =>

Should it be

(PageStart as text) =>

Well spotted, MF! Thanks. I’ve fixed it now.

Hi Mynda,

Thanks a lot for your quick response.

Best regards,

Julian

Hi Mynda,

For some websites, it’s a must to go through each page by manual to reach the last page. Is a way to automatically detect how many pages in a total then put that number in the query run?

Best regards,

Julian Chen

Hi Julian,

I’m not aware of any way to automate this. Usually the web page will have a ‘jump to end’ button. Sometimes it’s designated with two “>>”.

Mynda

Hi Mynda,

Great post, but I would like to combine both your last blogs and being able to scrape from example and to invoke a function. Now I have invoked a function, but the data retrieved is not correct, since it does not have an example which gives power BI the necessary data to create the desired output.

I am sure it should be possible to do so, I just do not see how.

Than you and kind regards,

Hi Andres,

If you download the Power BI file for this tutorial you’ll see that it does combine both get data by example and then invoking the function.

Mynda