Do you have courses that would help with the topic of using Power BI Aggregation for finding the right balance of data load/refresh vs. query performance when using large datasets? One of the new data sources I am working on has hundreds of millions of rows.

Techniques I learned for smaller datasets so not work. It has forced me to experiment with DirectQuery, which gives slow query times, but refresh/load is not an issue. Using Import data mode produces fast queries, but with unacceptably long load and refresh times.

I investigated Incremental Refresh, but my workspace does not support the Premium Service required to use that feature.

Most recently, I discovered the Aggregation feature that supports a mix of Direct Query for detailed data, and Import for summarized/aggregated data. My problem is that I'm struggling with how to produce the aggregated tables from the detailed data.

Thanks,

Larry

Hi Larry,

Great question. I don't specifically cover tricks for working with big data in my course.

You could try Table.Buffer and see if that helps. Just wrap Table.Buffer around your Source function like so:

Table.Buffer(Source = Excel.CurrentWorkbook(){[Name="Table1"]}[Content]),

Mynda

Hi Mynda,

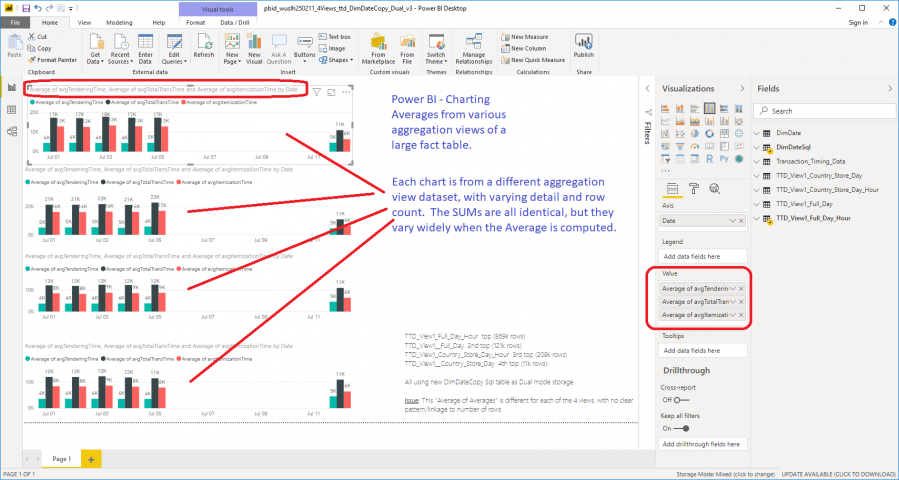

I am having trouble with consistent results for calculating the average values of summary/aggregation tables derived from a large direct query fact table.

I provided detail in the attached Word document (which lets me use Vi editor) and Power BI screen capture.

Any suggestions for a better way to calculate averages that would be more consistent across the various summary views of the fact table?

Thanks,

Larry

Hi Larry,

Can you please start a new thread for the question above as it's a different topic.

Thanks for understanding.

Mynda